Within 50 years, there will be artificial intelligence that is indistinguishable from a human being. Discuss.

I’m not talking about a Turing test for a computer’s ability to deceive us; tech can already do that really well. Think of a real-life, embodied version of Scarlett Johansson’s character in the Spike Jonze film Her. A programmed artefact that, if we met it in the street, would be so convincing that we couldn’t tell the difference between us and it.

Read more from Aleks Krotoski:

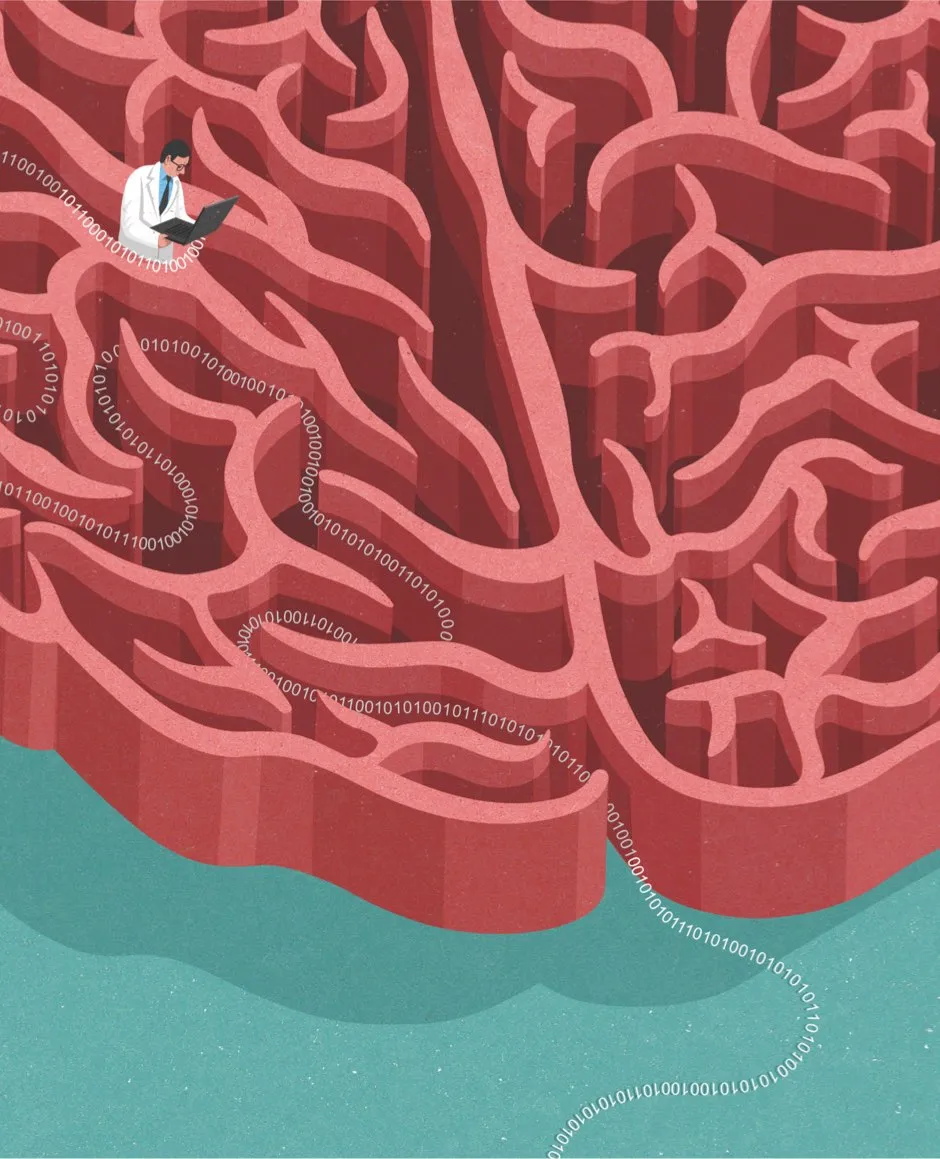

Full disclosure: I don’t believe it’ll happen, and certainly not in the next half century (even if we could make convincing synthetic bodies). I’ve spent too long investigating the complexity of the mind to imagine a computer programmer has cracked our code so quickly. We have too many nuances and contradictions to be reduced to a bunch of 1s and 0s.

And yet, I’ve had to fight my corner. There’s a lot of financial and emotional investment in the idea of a human simulacrum. Today’s ‘mad scientists’ are computer developers, empowered by popular culture and promises of delivering this fantasy.

Sometimes we’re told they’ve done it. Not long ago, the hot ticket was ‘sentiment analysis’: trawling for keywords in our online content to guesstimate whether we’re currently happy or sad about something.

Lately, big data has been hailed as the key to unlocking the essence of humanity. In both cases, as much data as possible is thrown into a bag, shaken up, and, hey presto, we know what you’re going to think and do next.

But in trying to mimic the black boxes of our minds with algorithms, developers are making assumptions. One is that the words we use mean what the dictionary says they do; another is that we behave online as we do offline.

Earlier this year, in research published in Psychological Science in the Public Interest, psychologist Lisa Feldman Barrett and her colleagues investigated whether our facial expressions predict our emotions. I'll sum up: they don't.

At least, not consistently enough for anyone to base an employment decision, an arrest, or a health diagnosis upon them. And that’s a problem, because these are areas where facial recognition systems are now being deployed, with real-life implications.

The research looked at more than 1,000 studies that tried to link facial expression with anger, sadness, disgust, fear, happiness and surprise. The results were clear: context is more important than what our facial muscles are doing. We might scowl when we’re angry, or when we have stomach ache. We might smile when we’re happy, or because we’re afraid.

So, the new hot ticket – reading emotions from faces – is based upon a flawed premise: that we can take human experience out of context and plop it into an algorithm. And that applies to many technologies of this kind.

We have evolved the ability to extract the signal from the noise. It’ll take more than 50 years of trial and error to duplicate that.

Follow Science Focus on Twitter, Facebook, Instagram and Flipboard