It wasn’t all that long ago that whole rooms would be taken up by just one computer. Now, we have powerful mini-computers in our pockets, super-thin laptops and headsets able to produce complicated virtual realities.

Despite these technologies becoming more powerful and fitting into smaller devices, costs have not risen sharply, and technology continues to advance each year.

This might all sound obvious, but much of it can be traced back to an observation known as Moore's Law, first made almost 60 years ago.

What is Moore’s Law?

Moore's Law was put forward by Gordon Moore, one of the founders of Intel (no prizes for guessing where the name came from). It first appeared in a paper he published in 1965.

“Moore observed that the number of transistors that could be fabricated on an integrated circuit was doubling every two years or so. He predicted that this exponential process would continue for 10 years, i.e. to 1975,” says Stephen Furber, a leading expert in computing and the ICL professor of computer engineering at the University of Manchester.

“The principal way that Moore’s Law has been delivered is by making transistors smaller. Smaller transistors are faster, more energy-efficient, and (until recently) cheaper, so it’s a win all round. Moore’s Law has been confused with a law about computer performance increases over time, but it is actually only about the number of transistors on a chip.”

On paper, this sounds modest, until you do the maths. If the number of transistors doubles every two years, as Moore's Law suggests, that amounts to a factor of roughly 1,000 every 20 years and roughly 1 million every 40 years.
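Where do those figures come from? Twenty years at one doubling every two years is 10 doublings, and 2^10 = 1,024; forty years is 20 doublings, and 2^20 = 1,048,576. Below is a minimal Python sketch of that arithmetic. It assumes a perfectly steady two-year doubling, which real chips never followed exactly, and `growth_factor` is a hypothetical helper name used only for illustration.

```python
def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Return how many times larger a quantity becomes after `years` years
    if it doubles once every `doubling_period` years: 2 ** (years / period).
    Assumes perfectly regular doubling (an idealisation)."""
    return 2 ** (years / doubling_period)


# Print the growth factor after 10, 20 and 40 years of two-year doublings.
for years in (10, 20, 40):
    print(f"{years} years: x{growth_factor(years):,.0f}")
```

Running it prints x32 after 10 years, x1,024 after 20 and x1,048,576 after 40, which is why the rounded "1,000" and "1 million" figures are used.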

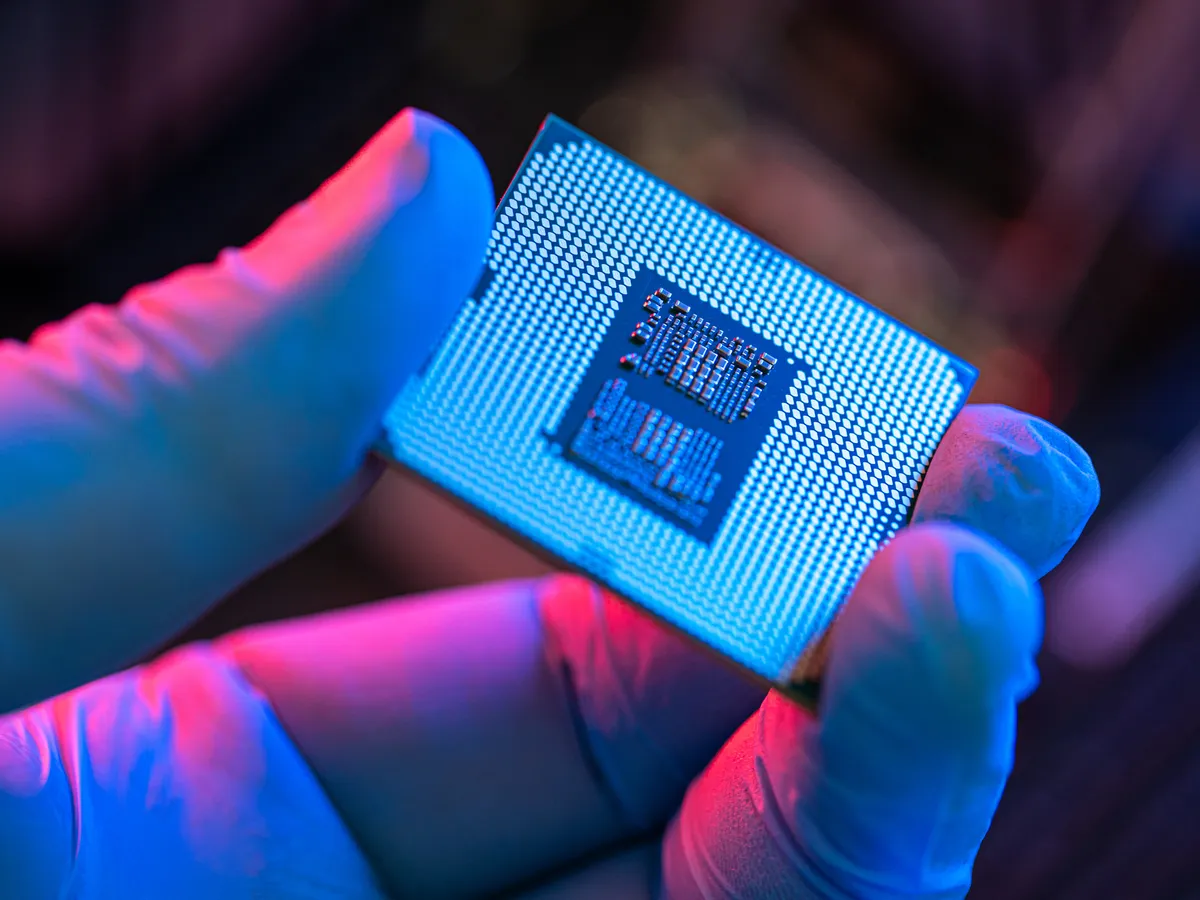

That might seem like a huge leap, but in practice chips have kept pace with, and even exceeded, that expectation. In 1983, Furber and Sophie Wilson designed a processor known as the ARM1. It is essentially the great-great-grandfather of modern processors, paving the way for what we have today.

The ARM1 featured 25,000 transistors. That may sound like a lot, but Apple's 2022 M1 Ultra processor has a staggering 114 billion transistors, and it sits inside a small desktop computer, the Mac Studio.
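As a rough, back-of-the-envelope check on the claim that real chips outpaced the prediction, the sketch below takes the article's figures at face value: 25,000 transistors in 1983 and 114 billion in 2022. It compares the actual growth with what a strict two-year doubling would predict, and works out the doubling time the two figures imply. The two chips are very different products, so treat the result as illustrative only. `implied_doubling_time` is a hypothetical helper name.

```python
import math

# Figures as quoted in the article (design year and transistor count).
ARM1_YEAR, ARM1_TRANSISTORS = 1983, 25_000
M1_ULTRA_YEAR, M1_ULTRA_TRANSISTORS = 2022, 114_000_000_000


def implied_doubling_time(n0: float, n1: float, years: float) -> float:
    """Years per doubling needed to grow from n0 to n1 in `years` years."""
    doublings = math.log2(n1 / n0)
    return years / doublings


years = M1_ULTRA_YEAR - ARM1_YEAR                  # 39 years
ratio = M1_ULTRA_TRANSISTORS / ARM1_TRANSISTORS    # 4,560,000x more transistors
predicted = 2 ** (years / 2)                       # growth under a 2-year doubling

print(f"Actual growth:         x{ratio:,.0f}")
print(f"Two-year doubling:     x{predicted:,.0f}")
doubling = implied_doubling_time(ARM1_TRANSISTORS, M1_ULTRA_TRANSISTORS, years)
print(f"Implied doubling time: {doubling:.2f} years")
```

On these numbers, a strict two-year doubling would give roughly a 740,000-fold increase. The actual jump is about 4.6 million-fold, which corresponds to one doubling about every 1.8 years.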

Why is Moore’s Law important?

It might sound strange to say that a theory almost 60 years old is still so relevant, especially in the rapidly changing world of modern technology. And yet, this theory has shaped the way consumer technology has grown.

“For half a century [Moore's Law] has been the driving force underpinning the semiconductor industry, which has delivered the technology required for computers, mobile phones, the internet, and so on. Much of the modern world is only possible because of Moore’s Law and its impact on the plans of that industry,” says Furber.

If we reach a point where Moore's Law no longer holds, it will raise the question of what comes next, ushering in a period of uncertainty for processing and computing power.

Is Moore’s Law still accurate today?

Depending on who you ask, Moore's Law either still holds and will continue to do so for years to come, or it can no longer stand the test of time as technology develops further.

As highlighted above, chips such as Apple's M1 Ultra were still outpacing the prediction in 2022. The problem is that the law becomes harder to sustain the longer it runs. Because Moore's Law is about the number of transistors on a chip, each new doubling usually means shrinking transistors yet again, and even with technological advances, every shrink is harder to achieve than the last.

“Moore’s Law is slowing down, and the cost per function benefits of further transistor shrinkage have gone,” says Furber.

“There are clearly physical limits here! There are also economic constraints - getting a 5 nanometre chip designed and into manufacture is formidably expensive, and very few companies have the volume of business to justify incurring this cost.”

That doesn't necessarily mean the theory will stop applying. Technological advances also open up new possibilities for how transistors are built and arranged on a chip.

“To date, chips have been largely 2-dimensional. But we are beginning to move out along the third dimension, so maybe the end is not quite as nigh as the 2D physics suggest,” says Furber.

About our expert, Stephen Furber

Stephen is a leading expert in the world of computing. He is currently the ICL Professor of Computer Engineering at the University of Manchester. He was also a principal designer of the ARM microprocessor, a device that went on to be used in most mobile computing systems.
