When the UK government decided to cancel school exams due to the coronavirus pandemic, they gave examination regulators Ofqual a challenge: allocate grades to students anyway, and make sure the grades given out this year are equivalent in standard to previous years. Ofqual’s solution was to create an algorithm – a computer program designed to predict what grades the students would have received if they had taken exams.

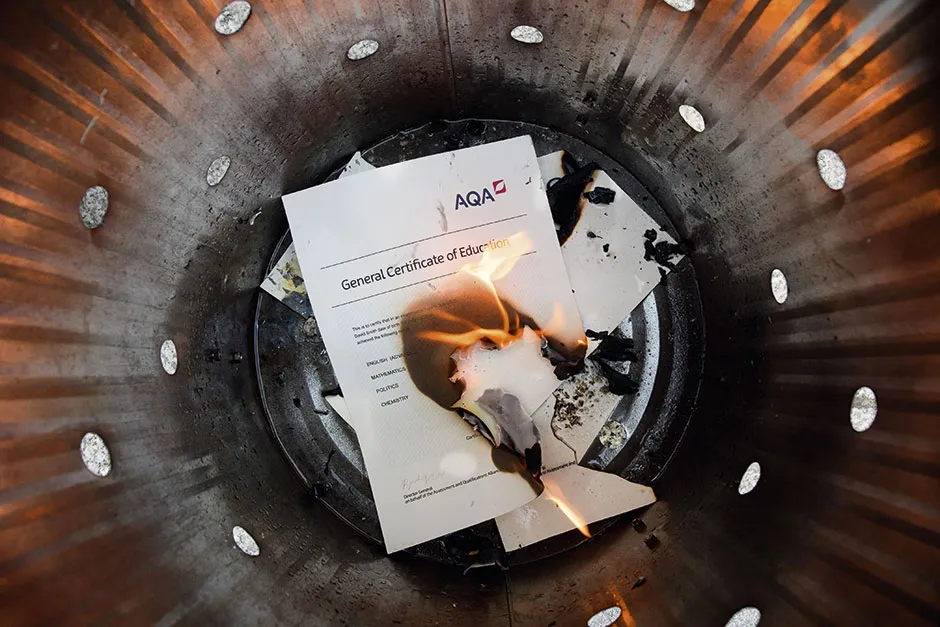

Unfortunately, when the computer-generated grades were issued, 40 per cent of A-Level students got lower grades than their teachers had predicted. Some of them several grades lower. Promised university places were withdrawn. Lawyers offered to take legal action against Ofqual. Angry teenagers took to the streets with placards saying ‘F**k the algorithm’.

Worse, students at large state schools seemed to have lost out more than those at private schools or those studying less popular subjects like classics and law. Faced with such glaring unfairness, the education minister announced that teacher predictions would be accepted after all, but not before universities had filled places on their courses, leaving everyone scrambling to sort out revised offers and over-subscribed courses.

Prime minister Boris Johnson called it a “mutant algorithm”, as if the computer program had somehow evolved evil powers to wreck teenagers’ aspirations. But in fact, Ofqual’s program did exactly what it was designed to do.

You might have expected the algorithm to start with teachers’ predictions, as they know each student best. But no, any information about each individual student played a minimal role. Teacher predictions, mock exam results and GCSE results were only used when the number of students taking a subject in a school was small – under 15 in most cases. For larger groups, that data was only used to compare the whole group to the school’s results in the previous three years.

Ofqual was instructed to make this year’s overall pattern of results equivalent to previous years’ results, to avoid ‘grade inflation’, and that is what they did. They took an average of each school’s results in the past three years to give an expected spread of that school’s results for this year: how many A*s, As, Bs, and so on.

Next, they used the past performance of this year’s group, compared to previous years, to adjust for any improvement or decline in school performance. But they also adjusted to keep the national distribution of grades similar to the past. That gave the allocation of grades for this year’s subject group. Then, to decide which student got which grade, each school was asked to rank each pupil from best to worst in each subject. The grades were dished out in rank order from best to worst.
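
To make the rank-order step concrete, here is a minimal sketch in Python. It is not Ofqual's actual model: it leaves out the adjustments for prior attainment and for the national distribution, and the function names, the student names and the tiny past results are all made up for illustration. It only shows the core idea of averaging a school's past grade shares and then handing grades down a teacher's ranking.

```python
from collections import Counter

GRADES = ["A*", "A", "B", "C", "D", "E", "U"]  # best to worst


def historical_distribution(past_years):
    """Average the share of each grade across a school's past years."""
    shares = {g: 0.0 for g in GRADES}
    for year in past_years:
        counts = Counter(year)
        for g in GRADES:
            shares[g] += counts[g] / len(year)
    return {g: total / len(past_years) for g, total in shares.items()}


def allocate_grades(ranked_students, distribution):
    """Hand out grades in rank order so the group matches the distribution."""
    n = len(ranked_students)
    result = {}
    cumulative = 0.0
    idx = 0
    for g in GRADES:
        cumulative += distribution[g]
        cutoff = round(cumulative * n)  # how many students have a grade so far
        while idx < cutoff and idx < n:
            result[ranked_students[idx]] = g
            idx += 1
    # Anyone left over because of rounding gets the lowest grade.
    while idx < n:
        result[ranked_students[idx]] = GRADES[-1]
        idx += 1
    return result


# Hypothetical school: three past years of results in one subject.
past_years = [
    ["A", "B", "B", "C"],
    ["A", "A", "B", "C"],
    ["B", "B", "C", "C"],
]
ranking = ["Asha", "Ben", "Cara", "Dev"]  # teacher's rank order, best first

grades = allocate_grades(ranking, historical_distribution(past_years))
print(grades)
```

In this toy school nobody has ever been awarded an A*, so the top-ranked student cannot receive one, whatever their own work looks like. Nothing about an individual enters the calculation except their position in the ranking.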

Fair and square?

Was Ofqual’s algorithm fair? Well, that depends how you define fair. Ofqual went to great lengths to check that no protected group would do worse under the algorithm than in exam years. Boys, deprived socioeconomic groups, different ethnic groups, all had similar outcomes to previous years, as a category. So why did state school students do worse than private school students?

In years when they take exams, state school students are more likely to be marked down from their teachers’ predictions. They’re statistically less likely to get the highest grades than private school students, which you may put down to poorer resources, lower aspirations from parents or teachers, or a combination of factors.

Whatever the reason, many of those protesting that the algorithm robbed them of their future may have been equally disappointed by the results of their own exam performance. But at least they would have had the chance to show what they could do under pressure.

This year’s students were doomed in advance, their attainments capped by what other students before them had achieved. The algorithm had already decided that your school is a better guide to your potential than anything you do yourself.

This illustrates two problems with algorithms. Their projections are based on the past, which means the predicted future will, by default, look like the past. And though they are often good at population-scale prediction, that doesn’t mean those predictions can or should be used for individuals.

Algorithms never predict the future. Humans predict the future, using projections made by machines. It was humans who wrote a program to find the patterns in data collected over time, and then asked it the question, ‘If these patterns continue, what does the future look like?’

Much of the time, this works well. Patterns of traffic flow, for example, repeat themselves daily and weekly. Other factors that change that regular pattern, like school holidays or special events, can be programmed into the algorithm. So can long-term trends, like an increasing population, or more reliance on deliveries from internet shopping.

Each of these decisions is an act of human judgment, based on both evidence and imagination. You could track trends in spending, combined with surveys about people intending to buy more online, and decide that delivery van traffic will go up. But you might also decide to collect data on how much people are driving to the shops, and conclude that reduction in car journeys will offset increased van traffic.

What data you include or exclude from your mathematical model is just as important as the type of computer program you choose to process it. But you can’t collect data from the future. In that way, algorithms are exactly like the real world: the future will only be different from the past if humans decide to make it different.

Algorithms, especially machine-learning algorithms, which is what’s usually meant by artificial intelligence, are good at finding patterns in large amounts of data. Better than humans, in many ways, because they can handle more information and not be distracted by prior assumptions. That’s why they’re so useful in information-rich problems, like spotting abnormalities in medical scans that could be an early symptom of disease.

What they can’t do is understand the real-world context of a task, which means they're bad at causality. An algorithm may find that one thing, studying physics, for example, predicts another thing – being a boy. But that’s a prediction in the statistical sense.

It means that if you pick an A-Level physics student at random, four times out of five you will find a boy. It certainly doesn’t mean that a girl can’t study physics. But it does mean that if you picked a random boy and girl out of a sixth form, the boy would be more likely to be studying physics than the girl.

On an individual level, though, such predictions are inaccurate and unjust. All the science subjects together account for only one in five A-Level entries, so it’s most likely that neither of our randomly picked students is studying physics.

To take a more extreme example, 9 out of 10 murderers are men so, statistically, being a man is a strong predictor of being a murderer. But most men are not murderers, so any man picked at random is unlikely to kill anyone. No man would expect to be convicted of murder without evidence that he intentionally killed somebody.
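
The gap between the two statements is easy to see with some arithmetic. The population figures below are hypothetical, chosen only to keep the sums simple; the one number taken from the text is that 9 out of 10 murderers are men.

```python
# Hypothetical population, for illustration only.
men = 500_000          # assumed number of men in the population
murderers = 100        # assumed total number of murderers
male_murderers = 90    # "9 out of 10 murderers are men"

# Chance that a murderer picked at random is a man.
p_man_given_murderer = male_murderers / murderers

# Chance that a man picked at random is a murderer.
p_murderer_given_man = male_murderers / men

print(f"P(man | murderer) = {p_man_given_murderer:.0%}")
print(f"P(murderer | man) = {p_murderer_given_man:.3%}")
```

With these assumed figures the first probability is 90 per cent and the second is 0.018 per cent. The same data supports a strong statement about the group and almost nothing about any one member of it.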

Algorithms and crime

Though we might expect that criminal courts treat each of us as individuals, algorithms are already used to predict who will commit a crime in future, or where, or who is at high risk of reoffending.

This is an extension of what police officers and judges have always done, trying to prevent crime before it happens, or to keep offenders out of jail if they’re unlikely to reoffend. In practice, though, it is very difficult to separate these predictions from the pitfalls described above: assuming the future looks like the past, and that what is true of a population is automatically true of an individual.

Prof Jeffrey Brantingham, from the University of California, Los Angeles, developed PredPol software to predict the locations of future crimes, based on models that forecast earthquakes. As it used data based on past reports of crime, it tended to send police back to the same communities, to find more crime and make more arrests. Widely used in the US and UK, it’s now being abandoned by some police forces because of doubts about effectiveness as well as fairness.

Other algorithms assign risk scores to individuals to help judges or parole boards decide how likely they are to reoffend. They are based on comparing data on that individual with past populations, and have been challenged in the US courts for their lack of transparency, and for judging one person based on what others have done in the past.

One risk score algorithm, called COMPAS, was found by investigative journalists at ProPublica to be more likely to wrongly classify black offenders as high risk, and white offenders as low risk. In July 2020, 10 US mathematicians wrote an open letter calling on their colleagues to stop working on such algorithms, because the outcomes were racist.

But if the justice system is getting more cautious about using algorithms to make life-changing decisions, others are embracing them.

Employers inundated with job applications are turning to algorithms to filter out unsuitable applicants, based on how they compare with current employees. This has backfired – Amazon’s algorithm, for example, was found to be using a correlation between being a successful current employee and being male, and had to be withdrawn.

Other employers claim that automating the first stage of the hiring process has widened their pool of new entrants. Unilever ditched recruitment fairs at a handful of top universities for online recruitment, where even video interviews were assessed by algorithms before a human saw any candidates. But critics point out that applicants with disabilities, or for whom English isn’t their first language, may be penalised by algorithms assessing their body language or voice.

And algorithms can be gamed. Some recruiters try to make their ads more appealing to women, by using software to make the language of the ad less ‘masculine’. Using the same software, an applicant could make their job application sound more masculine – to an algorithm trained on a largely male workforce.

It’s often claimed that algorithms can escape human bias and prejudice, if we design them for fairness instead of unfairness. It’s true that writing a program can force us to decide what we mean by ‘fair’.

Is it more fair to give exam results that assume your school’s past results are the best predictor of your own attainment, or to give you the grade your teacher predicted? The first will look closer to previous years’ grades, the second gives each individual the benefit of being judged personally. But is that fair on students in better schools, whose teachers were less likely to over-predict?

Can algorithms ever be fair?

In an unfair world, it’s a mathematical impossibility to create a fair algorithm. The company that designed the COMPAS algorithm defended its fairness, pointing out that neither race nor proxies for race were used as data. Instead, they blamed the disparity between the black and white populations.

In the past, a black offender was more likely to be arrested for another offence. This made ‘false positives’ – wrongly assigned high risk scores – more likely in groups that looked, to the algorithm, like previous offenders.

Although the algorithm was blind to the race of the person being assessed, many of the inputs on which it based the risk score would not be found equally in different populations. Details like family or employment history, knowing other offenders, and even attitudes to questions on social opportunity, will differ for people with different experiences of the justice system, and of life generally.

Treating each individual equally gave unequal results on a population scale. To give equal results by population groups would mean adjusting individuals according to race, which is arguably just as unfair in a justice system which should be judging each individual on their own record and character. Whenever a person is judged on the basis of what others like them have done in the past, it’s important to ask in what sense the others are ‘like them’.
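
A toy example shows how one rule, applied identically to everyone, can still produce unequal error rates. This is not COMPAS, whose workings are not described here: the rule, the threshold and the handful of records are invented for illustration, with one group assumed to have been arrested more often in the past.

```python
def false_positive_rate(records, threshold=2):
    """Share of people who did NOT reoffend but were still flagged high risk.

    Each record is (prior_arrests, reoffended). The rule is the same for
    everyone: flag as high risk if prior_arrests >= threshold.
    """
    did_not_reoffend = [arrests for arrests, reoffended in records if not reoffended]
    flagged = [arrests for arrests in did_not_reoffend if arrests >= threshold]
    return len(flagged) / len(did_not_reoffend)


# Hypothetical records. Group A has historically been arrested more often.
group_a = [(3, False), (2, False), (0, False), (4, True), (2, True)]
group_b = [(0, False), (1, False), (2, False), (0, False), (3, True)]

fpr_a = false_positive_rate(group_a)
fpr_b = false_positive_rate(group_b)
print(f"Group A false positive rate: {fpr_a:.2f}")
print(f"Group B false positive rate: {fpr_b:.2f}")
```

The rule never looks at group membership, yet in this made-up data two in three of group A's non-reoffenders are wrongly flagged, against one in four for group B. The difference comes entirely from the history that the input reflects.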

The advantage of using algorithms is that, if they are transparent, they force the institutions using them to be explicit about their assumptions and goals. In the Ofqual case, the goal was to make the overall distribution resemble previous years, and the assumptions included ‘your school’s previous performance is a better guide to your future performance than your own grades’.

The trouble with algorithms is not usually the maths. Most of the time, it’s what the humans have designed them to do.

- This article first appeared in issue 356 of BBC Science Focus.