BBC Focus is over 25 years old and over the last three decades we’ve reported on some of the greatest advances in human history, but a few technologies never became a reality. Here’s our rundown of the innovations we hope will become a part of our lives in the next 25 years...

Flying cars

5 YEARS AWAY- You wait 50 years for a flying car, and then three come along at once.

First up is Vahana: an Airbus project to develop battery-powered, single-seater aircraft, designed to follow predetermined routes, only deviating to avoid collisions. Swivelling rotors on the wings will let it take off and land without a runway. Prototypes should be flying by the end of 2017.

Second, Dubai recently announced plans to test autonomous air taxis as a way to beat the UAE’s notorious traffic jams. The Volocopter is an electric multi-copter with 18 rotors and a fully autonomous control system. It’s essentially a scaled-up drone with two seats and up to 30 minutes of flying time.

But if you want something more like the airborne cars of 1950s sci-fi (or whatever we were dreaming up back in the good old days), try Urban Aeronautics’ Fancraft. The Israel-based company wants to fulfil the dream of “an aircraft that looks like the classic vision of a flying car: doesn’t have a wing, doesn’t have an exposed rotor, and can fly precisely from point to point,” says Janina Frankel-Yoeli, Urban Aeronautics’ vice president of marketing.

Earlier flying cars needed runways to take off and land, which was, as Frankel-Yoeli says, “not much better than owning a car and an aircraft.” To go from point to point requires vertical take-off and landing, but for decades that could only be done by helicopters or larger aircraft. Urban Aeronautics’ solution is to use light but powerful engines, lightweight composite materials, and automated flight controls. Their ducted fan design – propellers housed in aerodynamic tubes – is powerful but unstable, so the Fancraft would be challenging for a human to fly unaided. Instead, computer-aided control tech takes over the tiny, split-second adjustments required to keep the car stable at speeds of 160km/h (100mph) or more.

But don’t put down a deposit yet. The main obstacle to a sky full of flying cars is regulation. Not only will every aircraft need to pass stringent safety tests, but a new system of air traffic control will be needed to cope with three-dimensional traffic jams above unwitting pedestrians. NASA is already working on that – tests have shown that multiple unmanned aerial vehicles (UAVs) can communicate with each other to avoid collisions. In the meantime, flying cars will mainly be reserved for emergency services and a few VIPs.

The fabled flying car will finally become a reality as the cost of superconducting super-magnets drops

BBC Focus, Summer 2011

Cyborgs

20 YEARS AWAY- In many ways, we are already cyborgs: contact lenses fix short-sightedness; cochlear implants restore hearing; prosthetic limbs help athletes to match or even outstrip their natural-bodied rivals; and exoskeletons allow paraplegic patients to walk again.

The next challenge looks to be controlling artificial limbs and senses as instinctively as we do our bodies.

Brain-computer interfaces are the latest focus of Facebook, Elon Musk and US defence research funders DARPA, among others. Other laboratory studies have already allowed patients to control prosthetic limbs via electrodes implanted in the brain. University of Pittsburgh scientists even connected a paralysed man’s sensory cortex to a robotic hand, allowing him to feel what the hand touched. Combining the strength, lightness and durability of today’s prosthetic materials with similar brain control methods would take us into superhuman, bionic territory.

Sensory augmentation is not far behind. Dr Robert Greenberg of US company Second Sight has developed implants that restore vision to blind patients. The company’s Orion device is a visual prosthetic that uses externally mounted video cameras to relay visual signals directly to the wearer’s brain.

Over 250 patients tried Orion’s predecessor, the Argus II, which translated camera output to optical nerves near the eye. Orion will bypass the damaged eye entirely, sending signals to the visual cortex at the rear of the skull.

“We are restoring relatively crude, but useful, vision to blind patients rather than improving normal sight,” says Greenberg. “Today’s Argus II vision is like a blurry black-and-white television.” Orion should be an improvement, but “colour and higher resolution are in the future.”

While Greenberg is realistic about the current limitations, he’s optimistic that we will eventually be able to restore sight to better-than-normal levels. “There is no physical reason why we can’t create a high-resolution interface someday, but the engineering challenges are great,” he says.

“I would guess we are at least 20 years away from superhuman vision.”

After years of sci-fi dreaming, man is on the verge of becoming a cyber-being

BBC Focus, January 1997

Holidays in space

ALREADY HERE, IF YOU’RE A BILLIONAIRE- No, we still don’t have hotels on the Moon or DisneyPlanet on Mars, but the first paying passengers have enjoyed unforgettable trips to the International Space Station. Now, private companies are racing to make space more accessible to non-millionaires. Virgin Galactic, SpaceX and even Manchester-based Starchaser Industries are testing the hardware that will safely get us there and home again.

Male pill

WITHIN 10 YEARS- Surprisingly, one of the most promising contraceptives for men is extra doses of testosterone, either on its own (doubling natural levels suppresses sperm production) or combined with other hormones. In clinical trials, some drugs have worked as well as the female pill. The main obstacles are delivery (many involve implants or regular injections) and side effects. Men might face mood swings, weight gain or acne as the price for not becoming fathers. Will they swallow it?

Jetpack

10 YEARS AWAY- Ready for your own Optionally Piloted Hovering Air Vehicle? New Zealand-based Martin Aircraft Company has your back. Okay, it’s the size of a small car and uses fans rather than jets, but it has a roll cage, parachute and can stay in the air for half an hour. Sadly, there’s still no firm on-sale date, so you have plenty of time to save up.

There’s a new breed of solo flying machines ready to take off

BBC Focus, January 2014

Mind-reading machines

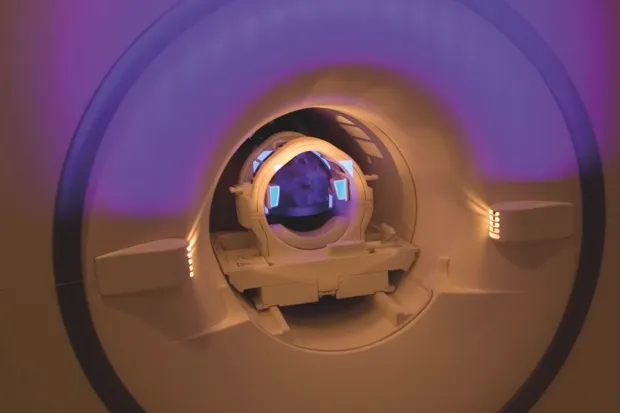

2-10 YEARS AWAY- Knowing what somebody is thinking would be a boon to law enforcement, suspicious partners, or Facebook advertisers. So far, attempts to match brain activity to specific thoughts have been crude and limited. But Prof Marcel Just, a psychologist at Carnegie Mellon University, has used functional magnetic resonance imaging (fMRI) to scan the brain and identify ideas as they form. His work goes beyond what a word looks or sounds like, to the building blocks of meaning.

fMRI is not usually time-specific. If someone’s brain is being scanned as they form a sentence, the successive ideas in the sentence will be blurred together in the scan image. “The novelty is our ability to separate out the individual concepts of the sentence,” says Just. This means training software to recognise the patterns of brain activity associated with different sentence elements.

In Just’s study, participants lay inside fMRI scanners and read sentences such as ‘The angry lawyer left the office’, designed to include broad concepts like emotion and changes of location. Data from these scans was used to build models of how sentences with similar meanings, such as ‘The tired jury left the court’, would be represented in brain activity. These predictions were consistent between individuals, suggesting that our brains handle these concepts in a similar way.

“We all use the same set of elements, even people who speak different languages,” says Just. “A model trained on data from English speakers can recognise thoughts from Mandarin speakers.”

There are limitations. While broad meanings can be reconstructed from the scans, similar concepts like tea/coffee, fish/duck may be harder to distinguish. Also, the subject has to be completely cooperative, which means it wouldn’t work well as an interrogation technique. And for now it requires an unwieldy and expensive fMRI scanner.

But Just’s team are working on an EEG (electroencephalography) version, which would only need a simple electrode cap to record electrical signals in different parts of the brain. He is optimistic about how soon a workable mind-reading device could be available. “Our grant ends in two years,” he says. “Ten years would be very slow and disappointing.”

It seems like the world inside our heads may never be private again

BBC Focus, September 2008

Robot butlers

ATLAS is a robot created by Boston Dynamics, and designed for search and rescue - but if ever the robot revolution arrives we can understand why…

25 YEARS AWAY- Do you believe that when artificial intelligence becomes smarter than us it will solve all our problems, or wipe out humanity altogether? Either way, AI is a game-changer. But currently, we still can’t create a robot that’s capable of making a cup of tea in the kitchen and then bringing it upstairs (not for want of trying…).

True, AI is already better than us at playing Go, chess and even US TV quiz show Jeopardy!, and Google’s DeepMind and IBM’s Watson are applying their machine intelligence to useful tasks like medical research and aviation safety. But will we ever have AGI – Artificial General Intelligence – that can match all the types of thinking humans do, using language in the same imprecise, contextual way, and adapting to the unpredictability of the physical world and emotional people?

One man who thinks we will is Prof Juergen Schmidhuber, head of Swiss research laboratory IDSIA. His Long Short-Term Memory (LSTM) machine-learning technique is used in Google Voice, Amazon’s Alexa and Facebook translation, and probably in your own smartphone, too.

LSTM is a development of earlier neural nets (NNs). NNs are programs that can find patterns or optimal solutions to problems without being given explicit rules. “NNs are computationally limited in many ways and insufficient for AGI,” says Schmidhuber. He’s been working on advanced deep-learning NNs for over 25 years, and developed the LSTM approach to give his AI a more human-like processing ability. Unlike previous versions, it’s able to hold relevant information until it’s needed, and to ‘forget’ less useful data. In other words, it can prioritise useful information to ‘remember’, and learn by trial and error from its mistakes. It’s had impressive results in sorting images, finding patterns and winning computer games. “LSTM relates to traditional NNs like computers relate to mere calculators,” says Schmidhuber. “It’s become the dominant general purpose deep-learning algorithm, and is now on three billion smartphones.”
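For readers who want to see the ‘remember’ and ‘forget’ idea in action, here is a minimal sketch of a single LSTM cell, assuming the standard textbook formulation with forget, input and output gates. It is an illustration only, not Schmidhuber’s own code: the scalar (single-number) cell, the function names and the hand-picked weights are all assumptions chosen to make the behaviour easy to see.

```python
import math


def sigmoid(z):
    """Squash any number into the range 0..1, so it can act as a 'gate'."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell (standard textbook equations).

    params maps each gate name to hypothetical weights (w_x, w_h, bias).
    """
    def gate(name, squash):
        w_x, w_h, b = params[name]
        return squash(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old memory to keep
    i = gate("input", sigmoid)         # how much new information to let in
    o = gate("output", sigmoid)        # how much of the memory to reveal
    g = gate("candidate", math.tanh)   # the new information itself

    c = f * c_prev + i * g             # updated memory (cell state)
    h = o * math.tanh(c)               # visible output for this step
    return h, c


# Hand-picked illustrative weights (assumptions, not learned values).
# REMEMBER: forget gate held open, input gate held shut -> memory is retained.
REMEMBER = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 10.0),
    "candidate": (1.0, 0.0, 0.0),
}
# FORGET: same cell, but the forget gate is held shut -> memory is wiped.
FORGET = {**REMEMBER, "forget": (0.0, 0.0, -10.0)}


def run(params, inputs, c0=1.0):
    """Feed a sequence through the cell, starting with memory c0."""
    h, c = 0.0, c0
    for x in inputs:
        h, c = lstm_step(x, h, c, params)
    return h, c
```

Calling `run(REMEMBER, [0.5, -0.3, 0.8])` leaves the stored memory almost untouched by the incoming values, while `run(FORGET, [0.5, -0.3, 0.8])` drives it close to zero. In a real network these gate weights are learned from data rather than set by hand.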

Schmidhuber’s former students went on to co-found Google’s DeepMind, and to work for many other big tech companies. Now he’s started his own company, Nnaisense, and he’s hoping to achieve human-level AGI by 2050.

Forget the dishes, leave the laundry and don’t even bother with the guttering. C-3PO is on his way

BBC Focus, September 2008

Quantum computer

AT LEAST 20 YEARS AWAY- As the laws of physics hamper the rush for smaller, cheaper and more powerful microchips, the elusive power of the qubit (quantum bit) grows more tantalising. Caltech scientists recently announced a breakthrough in using light to store data for quantum computing, capturing individual photons in memory modules the size of a red blood cell. It’s another step towards a quantum chip, but a quantum computer fit for the mass market still looks decades away.

Time machine

NEVER. OR SURELY THEY WOULD HAVE COME BACK TO TELL US?- Breakthrough! A physicist at the University of British Columbia has calculated that it is theoretically possible to travel back in history, using the curvature of space-time. By recreating the time dilation that happens near a black hole, says Dr Ben Tippett, we could fold time into a circle. Unfortunately, to do that we’d need a new material called ‘exotic matter’ to bend space-time, and we haven’t invented that yet. Not that it’s the only problem with time travel.

Invisibility cloak

AT LEAST 10 YEARS AWAY- Invisibility is simple: it’s just a matter of redirecting light so it passes right through, or around, the object you don’t want to see. This year, a team from TU Wien achieved this by irradiating an object with a light pattern tailored to its internal structure, enabling them to guide the light through the object “as if the object was not there at all”. So it’s possible in the lab, but we’re still a long way from Harry Potter’s magical invisibility cloak.

3D holograms

25 YEARS AWAY- “Help me, Obi-Wan Kenobi, you’re my only hope.” That iconic hologram of Princess Leia from Star Wars: Episode IV – A New Hope looks fairly primitive, but we shouldn’t judge too harshly. True workable holograms, that use lasers to trap 3D images in 2D planes, are trickier than they look. Yes, you can buy kits and make your own holograms at home, but these are fixed in time, limited in size, and have to be viewed in low light.

Today, our best way of creating a ‘holographic’ image is not a true hologram, but a form of either VR (virtual reality), AR (augmented reality) or MR (mixed reality) that’s viewed through special headgear.

Microsoft’s HoloLens, for example, projects the virtual objects onto a glass visor, using an array of sensors to orient the image to your real position. Other companies are working on similar solutions, with the goal of integrating the 3D image into what’s really there. You’ll be able to point, draw and move the virtual images, just like Tom Cruise in Minority Report. You could even project yourself into an office thousands of miles away.

You’re probably thinking, “Fine, I don’t care if it’s not a real hologram, if it lets me interact with absent friends and workmates, then I’m in!”

But if you’re a hologram purist, don’t despair just yet. Making a true hologram, like taking a photo, involves recording the light bouncing off an object so that an image of the object can be reconstructed. Holograms use laser beams, creating interference patterns to give the 3D effect, but this requires some expensive and elaborate technology. It’s tricky to do, and still the results aren’t great. But researchers at the Technical University of Munich, led by Dr Friedemann Reinhard, have found a way to overcome the hurdles. Instead of using lasers, the team created holograms using radio waves emitted by a standard Wi-Fi router. The images are blurry (so don’t expect any mini Princess Leias anytime soon), but recognisably show the shape of the original object. And Reinhard points out that, since Wi-Fi signals can pass through walls, their technology could allow us to see inside closed rooms, with obvious implications for security and privacy. Maybe you’ll finally find out what your teenager gets up to (if you’re sure you want to know).

The day may yet come when nothing will be guaranteed solid to the touch

BBC Focus, January 1995

This is an extract from issue 315 of BBC Focus magazine.

[This article was first published in December 2017]